Multi-Basis Encoding

This example applies the Multi-Basis Encoding (MBE) quantum optimization algorithm [1] to MaxCut using TensorLy-Quantum. TensorLy-Quantum provides a tensor-train (TT) tensor-network circuit simulator for large-scale simulation of variational quantum circuits. Its interface is Pythonic and PyTorch-style, with full Autograd support, much like a conventional PyTorch neural network.

[1] T. L. Patti, J. Kossaifi, A. Anandkumar, and S. F. Yelin, “Variational Quantum Optimization with Multi-Basis Encodings,” (2021), arXiv:2106.13304.

import tensorly as tl

import tlquantum as tlq

from torch import randint, rand, arange, cat, tanh, no_grad

from torch.optim import Adam

import matplotlib.pyplot as plt

#device = 'cuda' # Use GPU

device = 'cpu' # Use CPU

nepochs = 40 # number of training epochs

nqubits = 20 # number of qubits

ncontraq = 2 # number of qubits to pre-contract into a single core

ncontral = 2 # number of layers to pre-contract into a single core

nterms = 20 # number of two-vertex terms (edges) in the graph

lr = 0.7 # learning rate for the Adam optimizer

### Generate an input state. For each qubit, 0 --> |0> and 1 --> |1>

state = tlq.spins_to_tt_state([0 for i in range(nqubits)], device) # generate generic zero state |00000>

state = tlq.qubits_contract(state, ncontraq)

Here we build a random graph with randomly weighted edges. Note that MBE encodes two vertices, which would ordinarily occupy two qubits, into a single qubit by using both the z- and x-axes. If the y-axis is also included, three vertices can be encoded per qubit.

vertices1 = randint(2*nqubits, (nterms,), device=device) # first vertex of each edge; MBE places 2*nqubits vertices on nqubits qubits

vertices2 = randint(2*nqubits, (nterms,), device=device) # second vertex of each edge

vertices2[vertices2==vertices1] += 1 # vertices are drawn at random, so shift any self-interacting term onto the next vertex

vertices2[vertices2 >= 2*nqubits] = 0 # wrap an index shifted past the last vertex back to vertex 0

weights = rand((nterms,), device=device) # randomly generated edge weights
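To make the encoding concrete, the sketch below maps a vertex index to the qubit and measurement axis that carry it. It assumes the ordering implied by the `cat((spinsz, spinsx))` call in the training loop, i.e. indices 0 to nqubits-1 are read along z and indices nqubits to 2*nqubits-1 along x. The helper `vertex_to_qubit_axis` is hypothetical and not part of TensorLy-Quantum.

```python
def vertex_to_qubit_axis(vertex, nqubits):
    """Map an MBE vertex index to (qubit index, measurement axis).

    Assumption: z-axis results are stored first, x-axis results second.
    """
    if not 0 <= vertex < 2 * nqubits:
        raise ValueError("vertex index out of range for a two-basis encoding")
    axis = "z" if vertex < nqubits else "x"
    return vertex % nqubits, axis


nqubits = 20
first = vertex_to_qubit_axis(3, nqubits)    # vertex read from qubit 3 along z
second = vertex_to_qubit_axis(23, nqubits)  # vertex read from qubit 3 along x
print(first, second)
```

Under this assumed ordering, vertices 3 and 23 share a physical qubit, which is how 20 qubits can hold a 40-vertex graph.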

RotY1 = tlq.UnaryGatesUnitary(nqubits, ncontraq, device=device) #single-qubit rotations about the Y-axis

RotY2 = tlq.UnaryGatesUnitary(nqubits, ncontraq, device=device)

CZ0 = tlq.BinaryGatesUnitary(nqubits, ncontraq, tlq.cz(device=device), 0) # one controlled-z gate for each pair of qubits using even parity (even qubits control)

unitaries = [RotY1, CZ0, RotY2]

circuit = tlq.TTCircuit(unitaries, ncontraq, ncontral) # build TTCircuit using specified unitaries
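The layer structure above (Y rotations, controlled-Z, Y rotations) can be illustrated on two qubits with a plain state-vector sketch. This is not how TensorLy-Quantum evaluates the circuit, which uses tensor-train cores. The functions `apply_ry` and `apply_cz` and the angle values are made up for illustration, and the rotation convention RY(θ) = [[cos(θ/2), -sin(θ/2)], [sin(θ/2), cos(θ/2)]] is an assumption.

```python
import math


def apply_ry(state, qubit, theta):
    """Apply RY(theta) to one qubit of a real 2-qubit state (index = 2*q0 + q1)."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    stride = 2 if qubit == 0 else 1
    new = state[:]
    for base in range(4):
        if base & stride:  # handle each amplitude pair once, from its bit=0 member
            continue
        a0, a1 = state[base], state[base | stride]
        new[base] = c * a0 - s * a1
        new[base | stride] = s * a0 + c * a1
    return new


def apply_cz(state):
    """Controlled-Z on two qubits: flip the sign of the |11> amplitude."""
    new = state[:]
    new[3] = -new[3]
    return new


state = [1.0, 0.0, 0.0, 0.0]   # |00>
thetas = [0.3, 1.1, -0.7, 2.0]  # illustrative rotation angles
state = apply_ry(state, 0, thetas[0])
state = apply_ry(state, 1, thetas[1])
state = apply_cz(state)
state = apply_ry(state, 0, thetas[2])
state = apply_ry(state, 1, thetas[3])

norm = sum(a * a for a in state)
print(state, norm)
```

Each step is unitary, so the squared norm stays at 1 up to floating-point error. The controlled-Z gate between the two rotation layers is what entangles the qubits.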

opz, opx = tl.tensor([[1,0],[0,-1]], device=device), tl.tensor([[0,1],[1,0]], device=device) # measurement operators for MBE
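A single-qubit calculation shows why measuring both operators yields two usable values per qubit. The sketch below uses plain Python rather than TensorLy-Quantum. It prepares the state RY(θ)|0> = (cos(θ/2), sin(θ/2)) and evaluates the Pauli-Z and Pauli-X expectation values by hand. The helper names are illustrative.

```python
import math


def ry_state(theta):
    """Real amplitudes of RY(theta)|0>."""
    return [math.cos(theta / 2), math.sin(theta / 2)]


def expectation(op, psi):
    """<psi|op|psi> for a real 2x2 operator and a real state."""
    op_psi = [sum(op[r][c] * psi[c] for c in range(2)) for r in range(2)]
    return sum(psi[r] * op_psi[r] for r in range(2))


PAULI_Z = [[1, 0], [0, -1]]
PAULI_X = [[0, 1], [1, 0]]

theta = 2.0
psi = ry_state(theta)
exp_z = expectation(PAULI_Z, psi)  # analytically cos(theta)
exp_x = expectation(PAULI_X, psi)  # analytically sin(theta)
print(exp_z, exp_x)
```

Both values lie in [-1, 1]. For this θ they have opposite signs, so after rounding the two vertices stored on this qubit would fall on opposite sides of the cut.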

opt = Adam(circuit.parameters(), lr=lr, amsgrad=True) # define PyTorch optimizer

loss_vec = tl.zeros(nepochs)

cut_vec = tl.zeros(nepochs)

for epoch in range(nepochs):

    # TTCircuit forward pass computes the expectation values of single-qubit Pauli-Z and Pauli-X measurements

    spinsz, spinsx = circuit.forward_single_qubit(state, opz, opx)

    spins = cat((spinsz, spinsx))

    nl_spins = tanh(spins) # apply non-linear activation function to measurement results

    loss = tlq.calculate_cut(nl_spins, vertices1, vertices2, weights) # calculate the loss function using MBE

    print('Relaxation (raw) loss at epoch ' + str(epoch) + ': ' + str(loss.item()) + '. \n')

    with no_grad():

        cut_vec[epoch] = tlq.calculate_cut(tl.sign(spins), vertices1, vertices2, weights, get_cut=True) # calculate the rounded MaxCut estimate (algorithm's result)

        print('Rounded MaxCut value (algorithm\'s solution): ' + str(cut_vec[epoch]) + '. \n')

    # PyTorch Autograd handles the backward pass and parameter update

    loss.backward()

    opt.step()

    opt.zero_grad()

    loss_vec[epoch] = loss.detach() # store the loss value without its autograd graph
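The two quantities tracked in the loop can be sketched without the library. The sketch assumes that the relaxed loss is the weighted sum over edges of the product of the two activated spins. It also assumes that the rounded cut is the total weight of edges whose endpoints have opposite signs. These are standard MaxCut conventions and may differ in scaling or detail from what `tlq.calculate_cut` computes. The function names and the toy graph are hypothetical.

```python
import math


def relaxed_loss(spins, edges, weights):
    """Sum of w_ij * tanh(s_i) * tanh(s_j); lower when neighbors have opposite signs."""
    return sum(w * math.tanh(spins[i]) * math.tanh(spins[j])
               for (i, j), w in zip(edges, weights))


def rounded_cut(spins, edges, weights):
    """Total weight of edges whose endpoints round to opposite signs.

    Assumption: a spin of exactly zero is rounded to +1.
    """
    signs = [1 if s >= 0 else -1 for s in spins]
    return sum(w for (i, j), w in zip(edges, weights) if signs[i] != signs[j])


spins = [0.9, -0.8, 0.1, -0.3]            # toy expectation values
edges = [(0, 1), (1, 2), (2, 3), (0, 2)]  # toy graph
weights = [1.0, 0.5, 2.0, 0.25]

loss = relaxed_loss(spins, edges, weights)
cut = rounded_cut(spins, edges, weights)
print(loss, cut)
```

In this toy graph three of the four edges join spins of opposite sign, so the relaxed loss is negative and the rounded cut collects those three weights. The differentiable loss guides the optimizer, while the rounded value is the reported solution.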

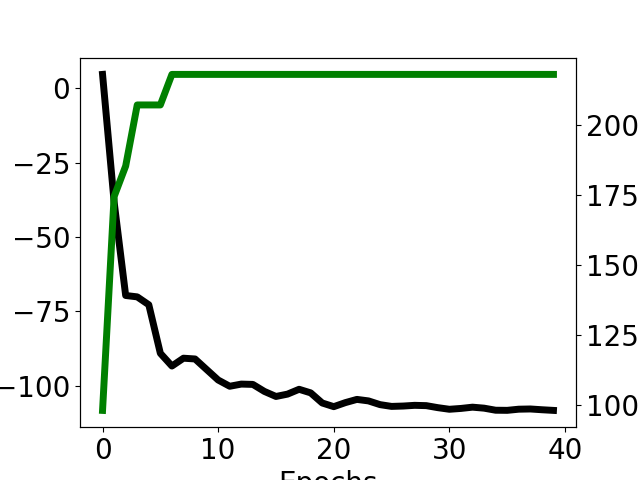

plt.rc('xtick', labelsize=20)

plt.rc('ytick', labelsize=20)

fig, ax1 = plt.subplots()

ax1.plot(loss_vec.detach().numpy(), color='k', linewidth=5)

ax2 = ax1.twinx()

ax2.plot(cut_vec.detach().numpy(), color='g', linewidth=5)

ax1.set_xlabel('Epochs', fontsize=20)

ax1.set_ylabel('Loss', fontsize=20, color='k')

ax2.set_ylabel('Cut', fontsize=20, color='g')

#ax1.set_xticks(fontsize=20)

#plt.yticks(fontsize=20)

plt.show()

Out:

Relaxation (raw) loss at epoch 0: 4.727077960968018.

Rounded MaxCut value (algorithm's solution): tensor(98.1748).

Relaxation (raw) loss at epoch 1: -38.129425048828125.

Rounded MaxCut value (algorithm's solution): tensor(174.5330).

Relaxation (raw) loss at epoch 2: -69.657470703125.

Rounded MaxCut value (algorithm's solution): tensor(185.4413).

Relaxation (raw) loss at epoch 3: -70.12276458740234.

Rounded MaxCut value (algorithm's solution): tensor(207.2579).

Relaxation (raw) loss at epoch 4: -72.77626037597656.

Rounded MaxCut value (algorithm's solution): tensor(207.2579).

Relaxation (raw) loss at epoch 5: -89.11137390136719.

Rounded MaxCut value (algorithm's solution): tensor(207.2579).

Relaxation (raw) loss at epoch 6: -93.39468383789062.

Rounded MaxCut value (algorithm's solution): tensor(218.1662).

Relaxation (raw) loss at epoch 7: -90.75611114501953.

Rounded MaxCut value (algorithm's solution): tensor(218.1662).

Relaxation (raw) loss at epoch 8: -91.01602935791016.

Rounded MaxCut value (algorithm's solution): tensor(218.1662).

Relaxation (raw) loss at epoch 9: -94.56245422363281.

Rounded MaxCut value (algorithm's solution): tensor(218.1662).

Relaxation (raw) loss at epoch 10: -98.09518432617188.

Rounded MaxCut value (algorithm's solution): tensor(218.1662).

Relaxation (raw) loss at epoch 11: -100.19799041748047.

Rounded MaxCut value (algorithm's solution): tensor(218.1662).

Relaxation (raw) loss at epoch 12: -99.46804809570312.

Rounded MaxCut value (algorithm's solution): tensor(218.1662).

Relaxation (raw) loss at epoch 13: -99.5732650756836.

Rounded MaxCut value (algorithm's solution): tensor(218.1662).

Relaxation (raw) loss at epoch 14: -101.93372344970703.

Rounded MaxCut value (algorithm's solution): tensor(218.1662).

Relaxation (raw) loss at epoch 15: -103.63218688964844.

Rounded MaxCut value (algorithm's solution): tensor(218.1662).

Relaxation (raw) loss at epoch 16: -102.83998107910156.

Rounded MaxCut value (algorithm's solution): tensor(218.1662).

Relaxation (raw) loss at epoch 17: -101.21794128417969.

Rounded MaxCut value (algorithm's solution): tensor(218.1662).

Relaxation (raw) loss at epoch 18: -102.41798400878906.

Rounded MaxCut value (algorithm's solution): tensor(218.1662).

Relaxation (raw) loss at epoch 19: -105.82402801513672.

Rounded MaxCut value (algorithm's solution): tensor(218.1662).

Relaxation (raw) loss at epoch 20: -107.06025695800781.

Rounded MaxCut value (algorithm's solution): tensor(218.1662).

Relaxation (raw) loss at epoch 21: -105.70433044433594.

Rounded MaxCut value (algorithm's solution): tensor(218.1662).

Relaxation (raw) loss at epoch 22: -104.61807250976562.

Rounded MaxCut value (algorithm's solution): tensor(218.1662).

Relaxation (raw) loss at epoch 23: -105.12229919433594.

Rounded MaxCut value (algorithm's solution): tensor(218.1662).

Relaxation (raw) loss at epoch 24: -106.37655639648438.

Rounded MaxCut value (algorithm's solution): tensor(218.1662).

Relaxation (raw) loss at epoch 25: -106.974365234375.

Rounded MaxCut value (algorithm's solution): tensor(218.1662).

Relaxation (raw) loss at epoch 26: -106.85466003417969.

Rounded MaxCut value (algorithm's solution): tensor(218.1662).

Relaxation (raw) loss at epoch 27: -106.61402130126953.

Rounded MaxCut value (algorithm's solution): tensor(218.1662).

Relaxation (raw) loss at epoch 28: -106.71804809570312.

Rounded MaxCut value (algorithm's solution): tensor(218.1662).

Relaxation (raw) loss at epoch 29: -107.39781951904297.

Rounded MaxCut value (algorithm's solution): tensor(218.1662).

Relaxation (raw) loss at epoch 30: -107.93941497802734.

Rounded MaxCut value (algorithm's solution): tensor(218.1662).

Relaxation (raw) loss at epoch 31: -107.65807342529297.

Rounded MaxCut value (algorithm's solution): tensor(218.1662).

Relaxation (raw) loss at epoch 32: -107.2333984375.

Rounded MaxCut value (algorithm's solution): tensor(218.1662).

Relaxation (raw) loss at epoch 33: -107.56609344482422.

Rounded MaxCut value (algorithm's solution): tensor(218.1662).

Relaxation (raw) loss at epoch 34: -108.2602767944336.

Rounded MaxCut value (algorithm's solution): tensor(218.1662).

Relaxation (raw) loss at epoch 35: -108.2996597290039.

Rounded MaxCut value (algorithm's solution): tensor(218.1662).

Relaxation (raw) loss at epoch 36: -107.91377258300781.

Rounded MaxCut value (algorithm's solution): tensor(218.1662).

Relaxation (raw) loss at epoch 37: -107.84062194824219.

Rounded MaxCut value (algorithm's solution): tensor(218.1662).

Relaxation (raw) loss at epoch 38: -108.09676361083984.

Rounded MaxCut value (algorithm's solution): tensor(218.1662).

Relaxation (raw) loss at epoch 39: -108.31858825683594.

Rounded MaxCut value (algorithm's solution): tensor(218.1662).

Total running time of the script: ( 0 minutes 1.946 seconds)